How to host your application on a VPS for under $5/month

Discover How to Host Your Full-Stack Web Application for Under $5/Month

Are you tired of overpaying for web hosting? Imagine hosting your full-stack web application for less than the cost of a cup of coffee each month! In this article, I’ll reveal a step-by-step guide to hosting your application on a VPS for under $5 per month.

This article walks through the bare-bones, manual steps for deploying the application.

Prerequisites:

- An account with Hetzner Cloud.

- A web application ready to deploy with docker files for building the images.

Source code:

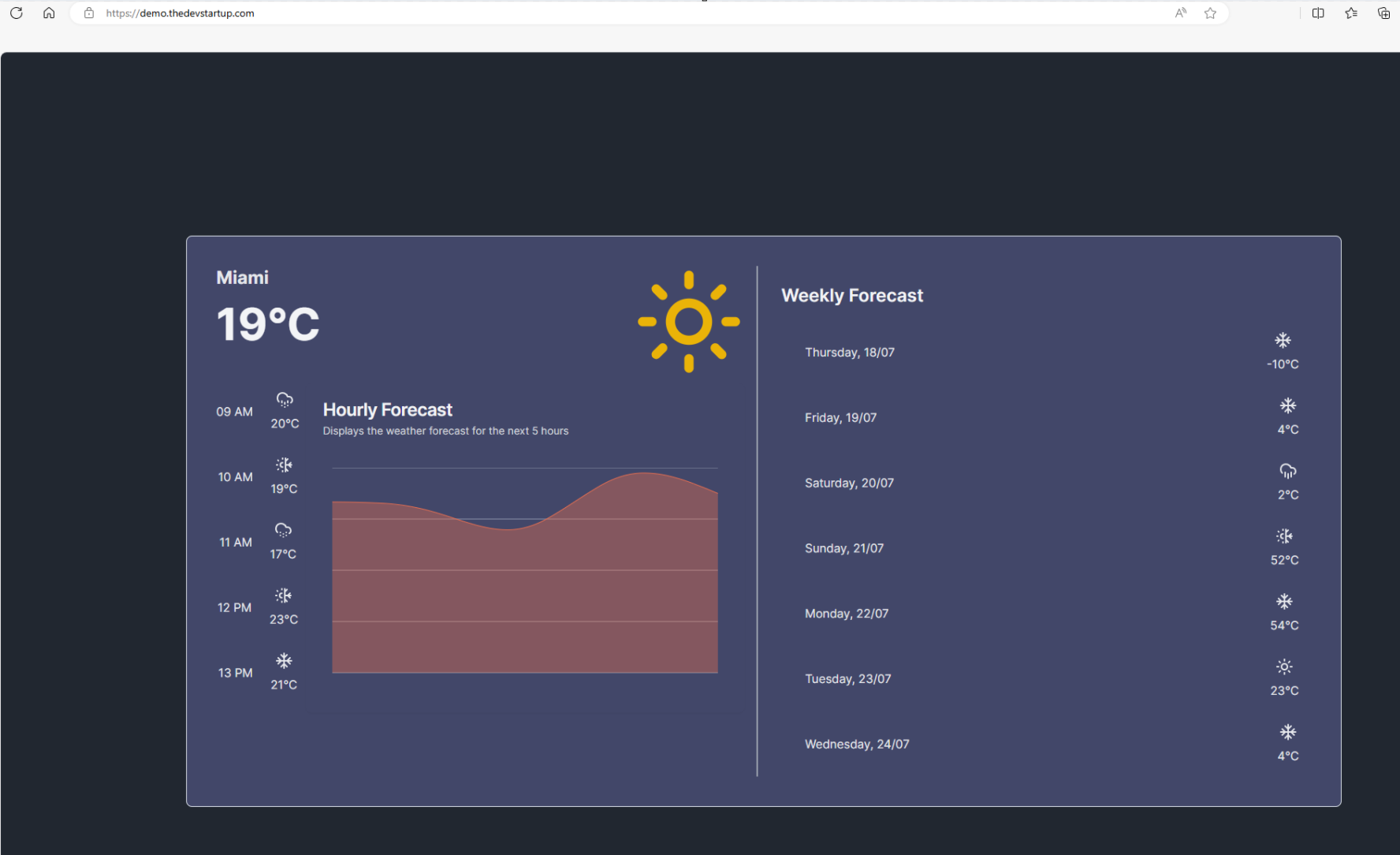

- You can find the demo application I used for this article here: https://github.com/hoejsagerc/DemoFullstackApplication

What I will be deploying in this article

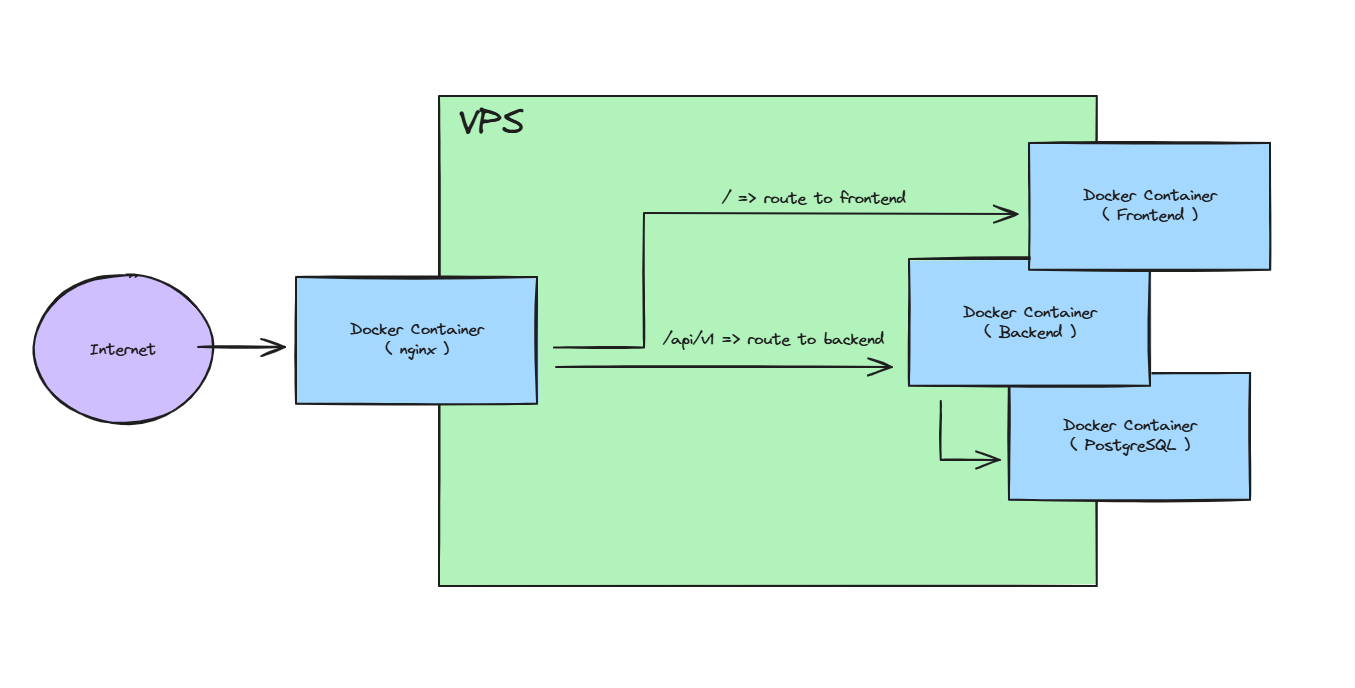

I will be deploying a single VPS with Docker installed. Then, I will deploy an Nginx container. This container will handle all traffic coming from the internet into my application and route it to either the frontend or the backend depending on the URL path. The Nginx container will also manage SSL encryption using a Let's Encrypt certificate. Lastly, I will deploy a PostgreSQL container for hosting my database and the Frontend + Backend containers.
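To make the routing idea concrete, here is a minimal sketch of what an Nginx configuration of this kind could look like, written to a scratch file by a small shell snippet. This is not the config from the demo repository. The domain `example.com`, the `/api/` prefix and the backend port `8080` are assumptions for illustration; the container names, the frontend port 3000 and the certificate and webroot paths match the compose file discussed later in this article.

```shell
# Write an illustrative nginx config to a scratch directory (not the repo's real file).
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
CONF="$OUT_DIR/example-nginx.conf"

# The quoted 'EOF' keeps $host and $request_uri literal so nginx sees them unchanged.
cat > "$CONF" <<'EOF'
server {
    listen 80;
    server_name example.com;            # assumption: replace with your domain

    # Serve certbot's webroot challenge files over plain HTTP.
    location /.well-known/acme-challenge/ {
        root /usr/share/nginx/html;
    }

    # Redirect everything else to HTTPS.
    location / {
        return 301 https://$host$request_uri;
    }
}

server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;

    # Assumption: the backend serves its API under /api/ on port 8080.
    location /api/ {
        proxy_pass http://backend:8080;
    }

    # All other paths go to the frontend container on port 3000.
    location / {
        proxy_pass http://frontend:3000;
    }
}
EOF

echo "wrote $CONF"
```

Because Nginx and the application containers share one docker network, the container names `frontend` and `backend` resolve as hostnames inside `proxy_pass`.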

Provision a VPS on Hetzner

Once you have an account on Hetzner, you should be able to create a new Project. If you already have a project, that works too.

Inside your project create a New Server by following the settings below:

Location

- Select a location. In my case I chose Falkenstein, Germany.

Image

- Choose Ubuntu 22.04

Type

- Select Shared vCPU

- Select x86 Architecture

- I have chosen the SKU CX22 with 2 vCPUs and 4 GB RAM

Networking

- I only select IPv4 - not IPv6.

SSH Keys

- Choose an SSH Key (If you don't know how to add an SSH key then this guide will explain everything you need to know: https://community.hetzner.com/tutorials/add-ssh-key-to-your-hetzner-cloud)

All other settings I will leave empty or at their defaults, and then click Create & Buy Now.

Once your server has been provisioned, you should be able to SSH into it using the SSH key you chose and the public IP address of the server.

    ssh -i ~/.ssh/id_rsa root@<public-ip-address>

Setup Dependencies on the Ubuntu system

To be able to deploy the application and proxy with docker, we need to have docker and docker compose installed on the system. To do this, run the following commands:

    sudo apt update && sudo apt upgrade -y

Installing docker:

    sudo apt-get update
    sudo apt-get install ca-certificates curl
    sudo install -m 0755 -d /etc/apt/keyrings
    sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
    sudo chmod a+r /etc/apt/keyrings/docker.asc

    echo \
      "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
      $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
      sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

    sudo apt-get update
    sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Installing docker compose

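    sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    sudo chmod +x /usr/local/bin/docker-compose
    docker-compose --version

Note that the docker-compose-plugin package installed in the previous step already provides the docker compose command (with a space), which is what the rest of this article uses. The binary downloaded here is the older standalone docker-compose 1.29.2.

You should now be able to run the following command and get the installed versions as output:

    docker --version && docker-compose --version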
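If you prefer to script this check, the following is a minimal sketch of a preflight helper. The function names `missing_tools` and `has_compose_plugin` are made up for this example. It only reports which commands are missing from the PATH and whether `docker compose version` answers; it does not install anything.

```shell
# Print the names of the given commands that are not found on PATH.
missing_tools() {
  missing=""
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || missing="$missing $tool"
  done
  # Strip the leading space before printing.
  printf '%s\n' "${missing# }"
}

# Succeeds only if the Compose v2 plugin responds to "docker compose version".
has_compose_plugin() {
  docker compose version >/dev/null 2>&1
}

# Example run: list anything missing, then report on the compose plugin.
echo "missing tools: $(missing_tools docker git curl)"
if has_compose_plugin; then
  echo "docker compose plugin: OK"
else
  echo "docker compose plugin: not available"
fi
```

An empty list after "missing tools:" means all three commands were found.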

Explaining the docker-compose.yml file

In the root of my repository I have a docker-compose.yml file. If you are not familiar with docker compose, I suggest you read the following documentation: https://docs.docker.com/compose/gettingstarted/. In short, it is a declarative way of describing what I want to build with docker containers.

In my compose file I have my frontend container described:

    frontend:
      container_name: frontend
      build:
        context: ./src/demoapp.frontend/
        dockerfile: Dockerfile
      env_file:
        - path: ./configs/frontend.env
          required: true
      ports:
        - "3000:3000"
      networks:
        - demo-network

This tells docker where my Dockerfile is located, so it knows where to build the image from. It then tells docker where to find the file containing my environment variables, such as database connection strings. Finally, it tells docker that I want the container attached to the docker virtual network demo-network, and that port 3000 on the host should be mapped to port 3000 in the container.

This is more or less the same for my backend container, just with some different values. The only extra thing here is that we have a depends_on: db. This tells docker not to start the backend before the database container has been started, which avoids the backend starting up with no database available.
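One caveat worth knowing: in its short form, `depends_on` only controls start order and does not wait until PostgreSQL actually accepts connections. A minimal sketch of one way to wait for that from the shell is shown below. The helper names `retry` and `db_ready` are hypothetical; the service name `db` and the user `postgres` are the ones used in this compose file, and `pg_isready` ships with the official postgres image.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
# usage: retry <attempts> <delay-seconds> <command...>
retry() {
  attempts="$1"; delay="$2"; shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 1
}

# Ask PostgreSQL inside the db container whether it accepts connections.
# -T disables TTY allocation so this also works from scripts.
db_ready() {
  docker compose exec -T db pg_isready -U postgres >/dev/null 2>&1
}
```

From the repository root you could then run, for example, `retry 30 2 db_ready && docker compose up -d backend` to start the backend only once the database answers.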

For the nginx container I will mount volumes. I have an nginx configuration file located on the VPS, and I want the nginx container to have access to it. We are also mounting a volume in which the SSL certificates are stored. Notice that I have commented out one of the volumes: the temporary config is only used for the initial certificate request, and the commented-out production config replaces it afterwards.

    nginx:
      image: nginx:latest
      container_name: nginx
      ports:
        - "80:80"
        - "443:443"
      volumes:
        - ~/nginx/letsencrypt:/etc/letsencrypt
        - ~/nginx/webdata:/usr/share/nginx/html
        - ./configs/temp-nginx.conf:/etc/nginx/conf.d/default.conf
        # - ./configs/prod-nginx.conf:/etc/nginx/conf.d/default.conf
      networks:
        - demo-network

Besides the nginx container we will need certbot, which is a tool for handling SSL certificates with Let's Encrypt. For this container we again need two volumes for storing the certificates for the nginx container. These are the exact same volumes that were mounted into the nginx container. This allows certbot to request certificates and place them inside a directory which nginx has access to.

    certbot:
      image: certbot/certbot
      volumes:
        - ~/nginx/letsencrypt:/etc/letsencrypt
        - ~/nginx/webdata:/usr/share/nginx/html

Finally, I have my PostgreSQL container. Here again I have two directories on my VPS which are mounted inside the docker container. This makes all the data inside my database persist on the VPS.

    db:
      image: postgres:latest
      container_name: db
      environment:
        POSTGRES_USER: postgres
        POSTGRES_PASSWORD: postgres
      ports:
        - 5432:5432
      volumes:
        - ~/postgres/data:/var/lib/postgresql/data
        - ~/postgres/backups:/backups
      networks:
        - demo-network

You can find the full docker-compose.yml file here.

Deploying the Docker Compose

Deploying the docker containers on the VPS is very simple. I will start by cloning my repository to my VPS. This can be automated very easily with some simple scripts or tools, but here I will show the manual way to explain what happens.

You can either clone your own application or try it with my sample application:

    git clone https://github.com/hoejsagerc/DemoFullstackApplication.git

Before you deploy the application, you might want to add some environment variables to the .env files located in the configs/ directory. In my sample application they are already preconfigured, but if you have any secrets, you should not store them in your repository. Once you have updated the .env files, you need to add your domain to the configs/temp-nginx.conf and configs/prod-nginx.conf files. I have added comments to show you everywhere the domain name should be changed.

There are different tools for storing secrets for your docker compose setup without having the secrets in plaintext on your VPS, but this is beyond the scope of this article.

You should now be ready to deploy your docker containers on the VPS. Run the following command from the root of the repository.

    docker compose up -d

Docker should now start pulling the PostgreSQL and nginx images, and afterwards it will start building your frontend and backend applications.

Once the command is completed you should see an output similar to below:

    ✔ Network demofullstackapplication_demo-network Created 0.1s
    ✔ Container frontend Started 0.8s
    ✔ Container nginx Started 0.7s
    ✔ Container backend Started 0.7s
    ✔ Container db Started

You should now have a docker network and 3 running containers. But why only 3? Well, that's by design.

The nginx configuration is set up to use a certificate, but we haven't obtained one yet, so the config is not accepted.

Setup SSL with Let's Encrypt

To obtain the certificates we will use certbot, which has been defined inside the docker-compose.yml. We will use it by running commands through the docker container. This allows us to use the certbot tool without having to install any dependencies or tools on the VPS.

We will start with a dry run to check that we can actually obtain a certificate. This is important because Let's Encrypt only allows a limited number of certificate requests per hour. A dry run avoids having to wait because you used up all your attempts.

Now run the following command - remember to replace demo.thedevstartup.com with your actual domain name:

    docker compose run --rm certbot certonly --webroot --webroot-path=/usr/share/nginx/html --dry-run -d demo.thedevstartup.com

You will be asked to enter some information, such as your email address, and if the dry run is successful you should see an output similar to:

The dry run was successful.

You can now run the live request by running the same command, just without --dry-run:

    docker compose run --rm certbot certonly --webroot --webroot-path=/usr/share/nginx/html -d demo.thedevstartup.com

Hopefully you should see in the output that the certificate was successfully received.

Automating certificate renewal with Certbot

Let's Encrypt certificates expire after 90 days. To automate renewal we can set up a very simple cronjob. Inside the configs/certbot-renew.crontab file, replace <path-to-docker-compose-fil> with the path to the docker-compose.yml.

Then all you need to do is run the following command from the directory containing that file. Be aware that this replaces any existing crontab for the current user.

    crontab certbot-renew.crontab

This cronjob checks for renewals every night and, if one is due, updates the certificate for your web application. The cronjob also logs to the following file: /var/log/certbot.log
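For readers who want to see the shape of such an entry, here is a minimal sketch that writes an illustrative crontab file. It is not the file from the demo repository. The clone location in `COMPOSE_DIR`, the 03:00 schedule and the nginx reload step are assumptions; `certbot renew`, `nginx -s reload` and the `-T` (no TTY) flag are standard options of the respective tools.

```shell
# Generate an illustrative crontab file; the real one lives in the demo repository.
COMPOSE_DIR="${COMPOSE_DIR:-/root/DemoFullstackApplication}"   # assumption: where the repo was cloned
CRON_FILE="${CRON_FILE:-$(mktemp)}"

# Unquoted EOF so that $COMPOSE_DIR is expanded into the generated line.
cat > "$CRON_FILE" <<EOF
# m h dom mon dow  command
# Every night at 03:00: renew certificates that are close to expiry, then reload nginx.
0 3 * * * cd $COMPOSE_DIR && docker compose run --rm -T certbot renew >> /var/log/certbot.log 2>&1 && docker compose exec -T nginx nginx -s reload >> /var/log/certbot.log 2>&1
EOF

# Show the result for review before installing it.
cat "$CRON_FILE"
```

`certbot renew` only requests a new certificate when the current one is close to expiry, so running it nightly is harmless. The reload makes nginx pick up renewed files without restarting the container.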

Deploying final Nginx Config

All that is left to do now is to update the nginx config so that it forces HTTPS and routes to the correct containers.

Inside the docker-compose.yml file, under the nginx service, you need to swap the nginx config files.

So comment out:

    # - ./configs/temp-nginx.conf:/etc/nginx/conf.d/default.conf

and remove the comment from:

    - ./configs/prod-nginx.conf:/etc/nginx/conf.d/default.conf

Then run:

    docker compose up -d --build

Check that everything works and some conclusions

You should now be able to navigate to your web application and see that everything works.
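If you want to check this from a terminal as well, the sketch below uses curl to confirm that plain HTTP is redirected and that HTTPS answers. The function names are hypothetical, and it assumes that the root path of your frontend returns status 200.

```shell
# Print only the HTTP status code for a URL, using curl's -w write-out format.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Check that port 80 redirects and that HTTPS answers with 200.
smoke_test() {
  domain="$1"
  plain=$(http_status "http://$domain/")
  secure=$(http_status "https://$domain/")
  echo "http -> $plain, https -> $secure"
  case "$plain" in
    301|302|307|308) ;;   # any redirect status is acceptable here
    *) echo "expected a redirect on port 80"; return 1 ;;
  esac
  [ "$secure" = "200" ]
}
```

Call it with your own domain, for example `smoke_test <your-domain>`. A non-zero exit code means one of the two checks failed.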

So what did we learn?

- We provisioned a VPS hosted on Hetzner for under $5/month

- We installed docker and docker compose

- We pulled the GitHub repository to the server and deployed the initial containers with docker compose

- We used certbot with Let's Encrypt to acquire an SSL certificate for hosting our application with HTTPS.

- Finally, we switched the Nginx configuration to force SSL and to configure the routes to our frontend and backend services.

What is the next step - and important things to think about

- At the moment no security has been configured on the VPS itself. You should improve its security by adding a firewall and restricting who can SSH to the server and how. You should also think about standard security measures like creating a new user, locking down root, and so on.

- At this stage there is no automation for handling updates to the web application. This can be done in a lot of different ways. You should definitely research different tools for handling this part.

- The PostgreSQL container currently has no backup configured. This can be handled by a simple cronjob using the pg_dump and pg_restore commands. But if you are running this application in prod, I would suggest using a cloud-hosted managed database.
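As a starting point for the backup item above, here is a minimal sketch of a backup script built around pg_dump. The function names, the retention of 7 dumps and the database name passed to `run_backup` are assumptions. The `/backups` path and `~/postgres/backups` directory are the bind mount from the compose file, and the service name `db` and user `postgres` come from there as well.

```shell
# Build a timestamped dump path, e.g. /backups/<db>-YYYYMMDD-HHMMSS.dump
backup_path() {
  dir="$1"; db="$2"
  printf '%s/%s-%s.dump\n' "$dir" "$db" "$(date +%Y%m%d-%H%M%S)"
}

# Delete all but the newest <keep> dumps in a directory (sorted by modification time).
prune_backups() {
  dir="$1"; keep="$2"
  ls -1t "$dir"/*.dump 2>/dev/null | tail -n +"$((keep + 1))" | while IFS= read -r old; do
    rm -f -- "$old"
  done
}

# Dump inside the db container, then prune old dumps on the host.
# /backups in the container is the bind mount of ~/postgres/backups on the VPS.
run_backup() {
  db="$1"
  target=$(backup_path /backups "$db")
  docker compose exec -T db pg_dump -U postgres -Fc -f "$target" "$db"
  prune_backups "$HOME/postgres/backups" 7
}
```

Run from the repository root, `run_backup <database-name>` writes a custom-format dump that pg_restore can read. Scheduling it is then a matter of adding one more cron entry.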